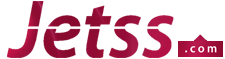

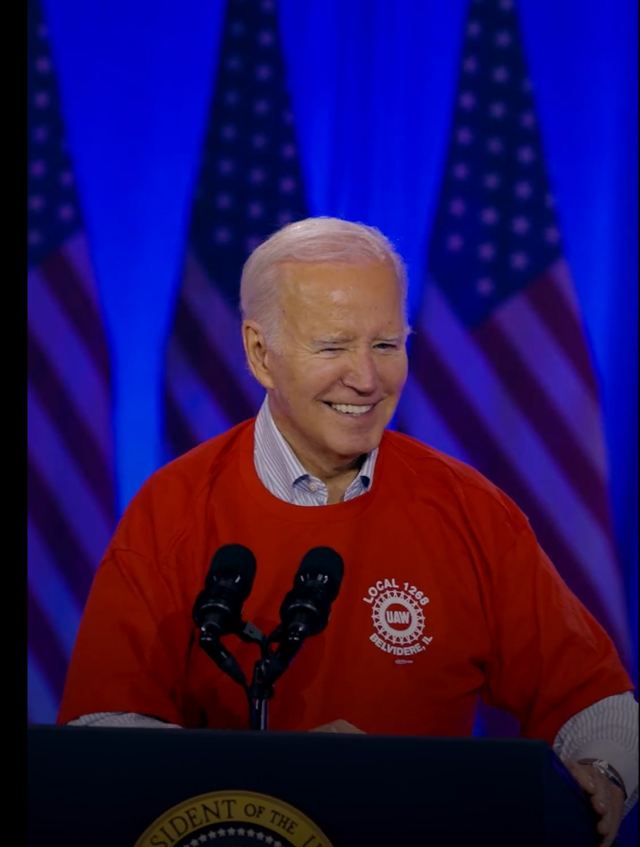

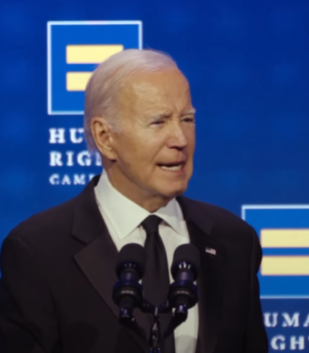

President Joe Biden signed a sweeping executive order to regulate the development and use of artificial intelligence (AI). The measure includes early oversight of large AI models, such as OpenAI’s GPT-5, before they are released. Tech companies are praising the new rules, which govern the federal government’s own use of AI and set guidelines for companies developing new models.

The executive order requires companies to disclose to the government any AI models that may pose risks to national security, including details about protective measures. Companies must also report on the development of large-scale AI systems and share the results of independent safety tests. These requirements apply especially to models that have not yet been released, such as the highly anticipated GPT-5.

The order aims to hire AI experts for the federal government, reduce barriers for immigrant workers in the sector, and evaluate how the Departments of Defense and Justice are implementing AI. Federal agencies will assess major AI models and programs to address discrimination, security risks, and military uses. The order also seeks to combat AI-enabled fraud such as deepfakes by instructing federal agencies to use watermarks and content authentication tools to label AI-generated material.

This initiative is the Biden administration’s most comprehensive attempt to establish working guardrails for AI development and to position the United States as a policy leader for the sector. Despite praise from AI startup leaders, there are concerns that the rules could consolidate the power of industry giants.

This executive order reflects a global trend towards regulating AI, with the European Union moving closer to introducing the world’s first AI laws. Companies like Google, OpenAI, and Nvidia support regulatory initiatives, and AI industry leaders are actively working with governments to shape the rules. Biden’s action comes as several countries seek to restrict the use of AI in response to concerns about security and ethics.